In today’s fast-paced, highly concurrent world, building scalable and reactive applications is a necessity. Vert.x, a powerful toolkit for building reactive applications on the Java Virtual Machine (JVM), provides developers with an excellent framework to achieve this. In this tutorial, we will explore the fundamentals of Vert.x and guide you through building a reactive application from scratch.

Table of Contents:

- What is Vert.x?

- Setting Up the Development Environment

- Understanding Vert.x Core Concepts

- 3.1. Verticles

- 3.2. Event Bus

- 3.3. Asynchronous Programming Model

- Building a Simple Vert.x Application

- 4.1. Creating a Maven Project

- 4.2. Writing a Verticle

- 4.3. Deploying and Running the Verticle

- Scaling Vert.x Applications

- 5.1. Vert.x Clustering

- 5.2. High Availability

- Integrating with Other Technologies

- 6.1. Working with HTTP and WebSockets

- 6.2. Integrating with Databases

- 6.3. Reactive Messaging with Apache Kafka

- Unit Testing Vert.x Applications

- 7.1. Vert.x Unit Testing Framework

- 7.2. Mocking Dependencies

- Deploying Vert.x Applications

- 8.1. Packaging Vert.x Applications

- 8.2. Running Vert.x on Docker

- 8.3. Deploying to the Cloud

- Monitoring and Debugging Vert.x Applications

- 9.1. Logging and Metrics

- 9.2. Distributed Tracing with OpenTelemetry

- Conclusion

Section 1: What is Vert.x?

Vert.x is an open-source, reactive, and polyglot toolkit designed for building scalable and high-performance applications. It provides a powerful and flexible framework for developing event-driven and non-blocking applications on the Java Virtual Machine (JVM). Vert.x enables developers to create reactive systems that can handle a large number of concurrent connections and process events efficiently.

At its core, Vert.x embraces the principles of the Reactive Manifesto, which include responsiveness, scalability, resilience, and message-driven architecture. It leverages an event-driven programming model, allowing developers to build applications that are highly responsive to incoming events and messages.

Key Features of Vert.x:

- Polyglot Support: Vert.x supports multiple programming languages, including Java, Kotlin, JavaScript, Groovy, Ruby, and Ceylon. This flexibility allows developers to leverage their language of choice while benefiting from Vert.x’s features.

- Event Bus: The Vert.x event bus enables communication and coordination between different components of an application, both within a single instance and across distributed instances. It supports publish/subscribe and point-to-point messaging patterns, making it easy to build decoupled and scalable systems.

- Asynchronous and Non-Blocking: Vert.x promotes non-blocking I/O operations and asynchronous programming. It utilizes a small number of threads to handle a large number of concurrent connections efficiently. This enables applications to scale and handle high loads without incurring the overhead of traditional thread-per-connection models.

- Reactive Streams Integration: Vert.x seamlessly integrates with Reactive Streams, a specification for asynchronous stream processing with non-blocking backpressure. This integration allows developers to build reactive applications that can handle backpressure and efficiently process streams of data.

- Web and API Development: Vert.x provides a rich set of APIs and tools for building web applications and RESTful APIs. It supports the creation of high-performance HTTP servers, WebSocket communication, and the integration of various web technologies.

- Clustering and High Availability: Vert.x offers built-in support for clustering, allowing applications to scale horizontally by running multiple instances across multiple nodes. It provides mechanisms for event bus clustering, distributed data structures, and failover, ensuring high availability and fault tolerance.

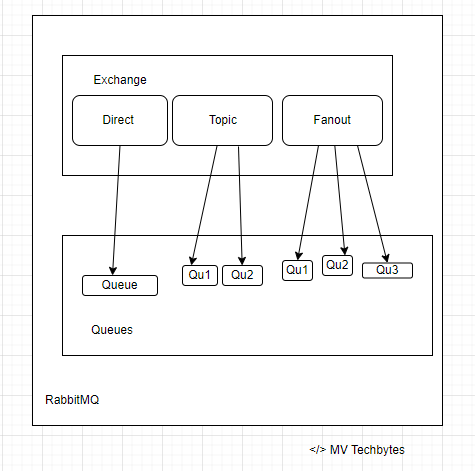

- Integration Ecosystem: Vert.x integrates with various technologies and frameworks, including databases, messaging systems (such as Apache Kafka and RabbitMQ), reactive streams implementations, service discovery mechanisms, and more. This enables developers to leverage existing tools and services seamlessly.

Vert.x is well-suited for developing a wide range of applications, including real-time systems, microservices, APIs, IoT applications, and reactive web applications. Its lightweight and modular architecture, combined with its reactive nature, makes it an excellent choice for building scalable and responsive applications that can handle heavy workloads and concurrent connections.

Whether you’re a Java developer or prefer other JVM-compatible languages, Vert.x offers a powerful toolkit to create reactive, event-driven applications that can meet the demands of modern distributed systems.

Section 2: Setting Up the Development Environment

Setting up the development environment for Vert.x involves a few essential steps. Here’s a step-by-step guide to getting started:

Step 1: Install Java Development Kit (JDK)

- Ensure that you have the latest version of JDK installed on your system. Vert.x requires Java 8 or higher. You can download the JDK from the Oracle website or use OpenJDK, which is a free and open-source alternative.

Step 2: Install Apache Maven (optional)

- While not mandatory, using Apache Maven simplifies the management of dependencies and building Vert.x projects. You can download Maven from the Apache Maven website and follow the installation instructions specific to your operating system.

Step 3: Set up a Project

- Create a new directory for your Vert.x project. Open a terminal or command prompt and navigate to the directory you just created.

Step 4: Initialize a Maven Project (optional)

- If you chose to use Maven, you can initialize a new Maven project by running the following

mvn archetype:generate -DgroupId=com.example -DartifactId=my-vertx-app -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false

This command creates a basic Maven project structure with a sample Java class.

Step 5: Add Vert.x Dependencies

Open the pom.xml file in your project directory (if using Maven) and add the following dependencies:

<dependencies>

<dependency>

<groupId>io.vertx</groupId>

<artifactId>vertx-core</artifactId>

<version>4.1.1</version>

</dependency>

</dependencies>

This configuration adds the Vert.x core dependency to your project.

Step 6: Build the Project (optional)

- If you’re using Maven, you can build your project by running the following command:

This command compiles your code, resolves dependencies, and creates a JAR file in the target directory.

Step 7: Start Coding

- You’re now ready to start developing with Vert.x. Create your Verticle class, which represents a component in a Vert.x application, and implement the necessary logic.

Step 8: Run the Application

To run a Vert.x application, you can use the following command in your project directory (assuming you’ve already built the project with Maven):

java -jar target/my-vertx-app-1.0-SNAPSHOT.jar

Replace my-vertx-app-1.0-SNAPSHOT.jar with the actual name of your JAR file.

Congratulations! You have successfully set up your development environment for Vert.x. You can now start building reactive applications using the Vert.x toolkit. Remember to refer to the Vert.x documentation and explore its rich set of features and APIs to harness its full potential. Happy coding!

Section 3: Understanding Vert.x Core Concepts

To effectively work with Vert.x, it’s crucial to understand its core concepts. Let’s explore the key concepts of Vert.x:

- Verticles:

- Verticles are the building blocks of a Vert.x application. They represent individual components or units of work that run concurrently within the Vert.x ecosystem.

- Verticles are lightweight and can be single-threaded or multi-threaded, depending on the configuration. They communicate with each other through the event bus.

- Verticles can handle various tasks, such as handling HTTP requests, processing messages, accessing databases, or performing background tasks.

- Vert.x provides different types of verticles, including standard verticles, worker verticles (for CPU-intensive tasks), and periodic verticles (for scheduled tasks).

- Event Bus:

- The event bus is a powerful communication mechanism provided by Vert.x that allows different verticles to exchange messages asynchronously.

- Verticles can publish messages to the event bus, and other verticles can subscribe to receive those messages based on different patterns or addresses.

- The event bus enables loose coupling between verticles, making it easy to build distributed and scalable systems.

- Vert.x provides different messaging patterns, including publish/subscribe and point-to-point messaging, which can be used with the event bus.

- Asynchronous Programming Model:

- Vert.x promotes an asynchronous programming model, which is fundamental to building reactive applications.

- Asynchronous programming allows non-blocking execution of tasks, enabling applications to handle high loads and concurrency efficiently.

- Vert.x APIs are designed to work asynchronously, allowing developers to write non-blocking code that can scale well.

- Callbacks, futures/promises, and reactive streams are common patterns used in Vert.x to handle asynchronous operations.

- Reactive Streams Integration:

- Vert.x integrates seamlessly with Reactive Streams, a standard for asynchronous stream processing with non-blocking backpressure.

- Reactive Streams provide a set of interfaces and protocols for building reactive applications that can handle backpressure and efficiently process streams of data.

- Vert.x includes support for Reactive Streams, enabling developers to use reactive streams implementations like RxJava, Reactor, or CompletableFuture seamlessly within Vert.x applications.

Understanding these core concepts is essential for harnessing the power of Vert.x. With Verticles, the Event Bus, Asynchronous Programming, and Reactive Streams, you can build scalable, responsive, and high-performance applications. By leveraging these concepts, you can create loosely coupled, concurrent systems that efficiently handle large workloads and enable seamless communication between components.

Section 4: Building a Simple Vert.x Application

To build a simple Vert.x application, we will go through the process of creating a basic Verticle, deploying it, and running the application. Follow these steps:

Step 1: Create a Maven Project

- If you haven’t already set up a Maven project, follow the instructions in the “Setting Up the Development Environment” section to create a new Maven project or use an existing one.

Step 2: Add Vert.x Dependency

- Open the

pom.xml file of your Maven project and add the Vert.x dependency within the <dependencies> section:

<dependency>

<groupId>io.vertx</groupId>

<artifactId>vertx-core</artifactId>

<version>4.1.1</version>

</dependency>

- This adds the Vert.x core dependency to your project.

Step 3: Create a Verticle

- In your project, create a new Java class representing your Verticle. For example, you can create a class named

MyVerticle.

- Make sure your class extends

io.vertx.core.AbstractVerticle.

- Override the

start() method to define the behavior of your Verticle when it is deployed. For simplicity, let’s print a message to the console:

public class MyVerticle extends AbstractVerticle {

@Override

public void start() {

System.out.println("MyVerticle has been deployed!");

}

}

Step 4: Deploy and Run the Verticle

- In your main application class (e.g.,

App.java), deploy the MyVerticle by creating a Vertx instance and using the deployVerticle() method:

import io.vertx.core.Vertx;

public class App {

public static void main(String[] args) {

Vertx vertx = Vertx.vertx();

vertx.deployVerticle(new MyVerticle());

}

}

Step 5: Run the Application

- Compile and run the application using your preferred method (e.g., Maven command or an integrated development environment).

- Once the application starts, you should see the message “MyVerticle has been deployed!” printed in the console.

Congratulations! You have successfully built a simple Vert.x application. This example demonstrates the basic structure of a Verticle and how to deploy it using the Vertx instance. You can further enhance your application by adding more Verticles, handling HTTP requests, or integrating with other technologies using the Vert.x APIs.

Section 5: Scaling Vert.x Applications

Scaling Vert.x applications is crucial to handle increased workloads and ensure high availability. Vert.x provides several mechanisms for scaling applications. Let’s explore two important aspects of scaling Vert.x applications: Vert.x Clustering and High Availability.

- Vert.x Clustering:

- Vert.x clustering allows you to run multiple Vert.x instances across multiple nodes to distribute the load and handle high concurrency.

- Clustering is achieved through a built-in event bus, which enables communication between different Vert.x instances running on different nodes.

- When multiple Vert.x instances are clustered, they form a distributed event bus network, allowing verticles to communicate seamlessly.

- To enable clustering, you need to configure your Vert.x instances to join the same cluster by specifying a cluster manager implementation.

- Vert.x provides different cluster manager implementations, such as Hazelcast, Apache Ignite, Infinispan, and more, that handle the management and coordination of the clustered instances.

- By leveraging clustering, you can horizontally scale your Vert.x application by adding more nodes to the cluster, enabling it to handle higher workloads and providing fault tolerance.

- High Availability:

- High availability ensures that your Vert.x application remains operational even in the face of failures.

- Vert.x provides features and best practices to achieve high availability in different scenarios:

- Circuit Breaker Pattern: Vert.x offers a built-in circuit breaker pattern implementation, allowing you to protect your application from cascading failures when dealing with remote services. It helps to manage failure thresholds, timeouts, and retries.

- Reactive Streams and Backpressure: Vert.x integrates with Reactive Streams, which enables efficient handling of streams of data with non-blocking backpressure. This helps to prevent overloading downstream systems and ensures resilience and stability in the face of varying workloads.

- Fault Tolerance: Vert.x provides mechanisms to handle failures and recover from them. For example, when a verticle fails, Vert.x can automatically redeploy it to ensure that the system continues running smoothly. Additionally, you can leverage cluster-wide shared data structures to maintain the state and recover from failures.

- Monitoring and Alerting: Implement monitoring and alerting mechanisms to detect and respond to any anomalies or failures in your Vert.x application. Utilize logging, metrics, and monitoring tools to gain insights into the application’s health and performance.

By leveraging Vert.x clustering and implementing high availability practices, you can ensure that your application scales effectively and remains resilient to failures. These mechanisms enable your application to handle increased workloads, distribute the load across multiple nodes, and provide fault tolerance and automatic recovery. Proper monitoring and alerting help you identify and address any issues promptly, ensuring the smooth operation of your Vert.x application.

Section 6: Integrating with Other Technologies

Vert.x offers seamless integration with various technologies and frameworks, allowing you to leverage existing tools and services in your applications. Here are some common integration points for Vert.x:

- Database Integration:

- Vert.x provides asynchronous clients and connectors for interacting with different databases, both SQL and NoSQL.

- For example, you can use the Vert.x JDBC client to connect to relational databases like MySQL, PostgreSQL, or Oracle.

- Vert.x also provides clients for popular NoSQL databases like MongoDB, Redis, and Apache Cassandra.

- These database clients allow you to perform asynchronous database operations efficiently and integrate database access with other Vert.x components.

- Messaging Systems:

- Vert.x seamlessly integrates with messaging systems, enabling you to build event-driven and distributed applications.

- Vert.x provides a unified API for working with message brokers such as Apache Kafka, RabbitMQ, and ActiveMQ.

- You can use the Vert.x event bus to publish and consume messages from these brokers, enabling communication between different parts of your system or integrating with external systems.

- Reactive Streams:

- Vert.x integrates with Reactive Streams, which is a specification for asynchronous stream processing with non-blocking backpressure.

- By leveraging Reactive Streams implementations like RxJava, Reactor, or CompletableFuture, you can easily integrate reactive libraries and frameworks into your Vert.x applications.

- This integration allows you to handle streams of data efficiently and apply reactive patterns across your application.

- Service Discovery:

- Vert.x provides a service discovery mechanism that allows services to discover and interact with each other dynamically.

- With service discovery, you can register services with associated metadata and retrieve them by name or other attributes.

- This feature is especially useful in microservices architectures, where services need to discover and communicate with each other without hard-coded dependencies.

- Web Technologies:

- Vert.x offers a powerful set of APIs and tools for building web applications and APIs.

- It integrates with web technologies like WebSocket, HTTP, and event-driven server-sent events.

- You can use the Vert.x Web API to handle HTTP requests, build RESTful APIs, serve static files, and implement routing and middleware functionalities.

- Additionally, Vert.x provides integration with popular web frameworks like Spring WebFlux and Express.js, allowing you to leverage their capabilities within your Vert.x applications.

- Authentication and Authorization:

- Vert.x integrates with authentication and authorization mechanisms, enabling secure access control to your applications.

- It supports various authentication methods, including basic authentication, OAuth 2.0, and JWT (JSON Web Tokens).

- Vert.x also provides integration with popular identity providers like Keycloak, Okta, and Google Sign-In.

These are just a few examples of the technologies that can be integrated with Vert.x. Vert.x’s modular and flexible architecture allows you to integrate with a wide range of tools and services, enabling you to leverage existing solutions and build powerful, feature-rich applications. When integrating with external technologies, refer to the Vert.x documentation and specific integration guides for detailed instructions and best practices.

Section 7: Unit Testing Vert.x Applications

Unit testing is an essential practice in software development, and Vert.x provides support for writing unit tests for your Vert.x applications. Let’s explore how you can effectively unit test your Vert.x applications:

- Testing Verticles:

- Verticles are the building blocks of a Vert.x application. You can write unit tests to validate the behavior of individual verticles.

- To test a verticle, create a test class for it and use a testing framework like JUnit or TestNG.

- Use the Vert.x Test API to set up and execute your tests. The Vert.x Test API provides utilities for creating Vert.x instances, deploying verticles, and simulating events.

- You can simulate events on the event bus, mock dependencies, and verify the expected behavior of your verticle.

- Mocking Dependencies:

- When unit testing a verticle, you may need to mock external dependencies such as databases, services, or message brokers.

- Use mocking frameworks like Mockito or EasyMock to create mock objects for your dependencies.

- Mock the behavior of these dependencies to simulate different scenarios and ensure the correct interaction between the verticle and its dependencies.

- Asynchronous Testing:

- Vert.x is designed for asynchronous programming, and your tests need to handle asynchronous operations appropriately.

- Use the Vert.x Test API to write assertions for asynchronous code. For example, you can use the

await() method to wait for asynchronous operations to complete.

- Use the

async() method to inform the test framework that the test is asynchronous and provide a completion handler to signal the completion of the test.

- Dependency Injection:

- Vert.x supports dependency injection, and you can use it to improve the testability of your code.

- Use a dependency injection framework like Google Guice or Spring to manage dependencies in your verticles.

- In your unit tests, you can provide mock or test-specific implementations of dependencies to ensure controlled testing environments.

- Integration Testing:

- In addition to unit tests, you may also want to perform integration tests to validate the interactions between different components of your Vert.x application.

- Integration tests involve deploying multiple verticles and simulating real-world scenarios.

- Use the Vert.x Test API and tools like the embedded Vert.x instance or containers like Docker to set up integration test environments.

- You can also use tools like WireMock to mock external dependencies and simulate network interactions.

Remember to follow best practices for unit testing, such as testing individual units in isolation, focusing on behavior rather than implementation details, and keeping tests concise and readable.

Vert.x provides a comprehensive testing framework and utilities to support effective unit testing of your applications. By writing unit tests, you can ensure the correctness of your code, detect bugs early, and maintain the quality and stability of your Vert.x applications.

Section 8: Deploying Vert.x Applications

Deploying Vert.x applications involves preparing your application for deployment and choosing the appropriate deployment options. Here are the key steps to deploy a Vert.x application:

- Package Your Application:

- Ensure that your Vert.x application is properly packaged for deployment.

- Create an executable JAR file that includes all the necessary dependencies.

- You can use build tools like Maven or Gradle to package your application, which will create a self-contained JAR file.

- Choose Deployment Options:

- Vert.x provides multiple deployment options based on your requirements and the target environment.

- Standalone Deployment: You can deploy your Vert.x application as a standalone JAR file on a server or a virtual machine.

- Containerized Deployment: Package your application as a Docker image and deploy it to container orchestration platforms like Kubernetes.

- Serverless Deployment: If you want to leverage serverless architectures, you can deploy your Vert.x application to platforms like AWS Lambda or Azure Functions.

- Configuration Management:

- Consider how you manage configuration for your Vert.x application in different deployment environments.

- Externalize configuration using configuration files, environment variables, or configuration servers like Consul or Spring Cloud Config.

- Make sure your application can read and utilize the configuration from the chosen configuration source.

- Scaling and Load Balancing:

- When deploying your application in a production environment, consider how to scale and load balance your Vert.x instances.

- Vert.x clustering allows you to run multiple instances of your application across different nodes, distributing the load and ensuring fault tolerance.

- Use load balancers like Nginx or Apache HTTP Server to distribute incoming traffic across multiple Vert.x instances.

- Monitoring and Logging:

- Set up monitoring and logging for your deployed Vert.x application to gather insights into its performance, health, and potential issues.

- Use monitoring tools like Prometheus, Grafana, or the Vert.x Metrics API to collect and visualize application metrics.

- Configure proper logging to capture important events, errors, and debugging information for troubleshooting purposes.

- Continuous Integration and Deployment (CI/CD):

- Automate your deployment process using CI/CD pipelines to streamline and ensure consistent deployments.

- Integrate your Vert.x application with CI/CD tools like Jenkins, GitLab CI, or AWS CodePipeline to automatically build, test, and deploy your application.

By following these steps and considering the deployment options, configuration management, scaling, monitoring, and automation, you can successfully deploy your Vert.x application and ensure its availability, scalability, and maintainability in various environments.

Section 9: Monitoring and Debugging Vert.x Applications

Monitoring and debugging Vert.x applications are crucial for maintaining their performance, identifying issues, and ensuring their smooth operation. Here are some approaches and tools you can use for monitoring and debugging Vert.x applications:

- Logging:

- Utilize logging frameworks like Log4j, SLF4J, or Vert.x’s built-in logging capabilities to capture important events, errors, and debugging information.

- Configure logging levels appropriately to balance the level of detail and performance impact.

- Use log aggregation tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk to collect, search, and visualize log data.

- Metrics and Health Checks:

- Vert.x provides a Metrics API that allows you to collect various performance metrics about your application, such as CPU usage, memory consumption, event loop utilization, and request/response rates.

- Integrate with monitoring tools like Prometheus, Grafana, or DataDog to collect and visualize these metrics in real-time dashboards.

- Implement health checks in your application to periodically assess its overall health and availability. Expose endpoints that can be probed by external monitoring systems.

- Distributed Tracing:

- Distributed tracing helps you understand and debug the flow of requests across different components of your Vert.x application, especially in microservices architectures.

- Tools like Jaeger, Zipkin, or OpenTelemetry can be integrated with Vert.x to provide distributed tracing capabilities.

- Instrument your code with tracing annotations or APIs to track requests as they pass through different verticles and external services.

- Request Logging and Monitoring:

- Log and monitor incoming requests to your Vert.x application to gain insights into their performance and identify potential bottlenecks.

- Use tools like Apache HTTP Server or Nginx as reverse proxies to capture request logs and enable advanced logging features.

- Implement request-level metrics and monitoring to track request/response times, error rates, and throughput.

- Debugging Techniques:

- Vert.x supports remote debugging, allowing you to attach a debugger to running Vert.x instances.

- Enable remote debugging by adding the appropriate JVM arguments to your application’s startup script or configuration.

- Use an Integrated Development Environment (IDE) like IntelliJ IDEA, Eclipse, or Visual Studio Code with the Vert.x plugin to connect and debug your running application.

- Application Performance Monitoring (APM) Tools:

- Consider using Application Performance Monitoring (APM) tools like New Relic, AppDynamics, or Dynatrace to gain deeper insights into your Vert.x application’s performance.

- These tools provide end-to-end visibility, capturing detailed transaction traces, database queries, external service calls, and resource utilization.

Remember to monitor your Vert.x applications in both development and production environments. Understand the performance characteristics of your application and establish baselines to identify deviations and potential issues.

By combining logging, metrics, distributed tracing, request logging, debugging techniques, and APM tools, you can effectively monitor and debug your Vert.x applications, ensuring optimal performance, identifying and resolving issues quickly, and providing a smooth user experience.

Section 10: Conclusion

In conclusion, Vert.x is a powerful and versatile toolkit for building reactive, event-driven applications that can handle high concurrency and scale effectively. In this tutorial, we covered various aspects of Vert.x development, starting from setting up the development environment to deploying and monitoring Vert.x applications.